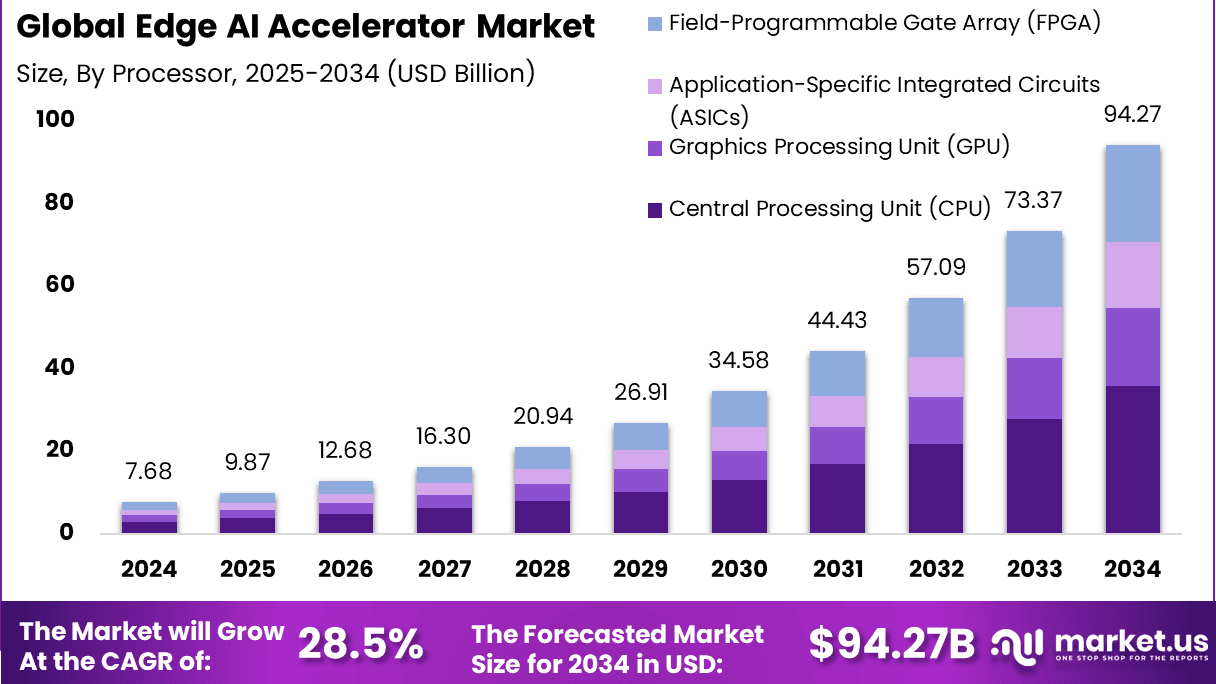

The Edge AI Accelerator Market is a specialized segment of the broader AI hardware industry covering chips that accelerate artificial intelligence computations directly on edge devices, such as smartphones, drones, industrial robots, and surveillance cameras. Instead of sending data to the cloud, these accelerators process it locally, which reduces latency, keeps sensitive data on the device, and improves energy efficiency. They are designed to run demanding AI tasks like object detection, voice recognition, and machine vision in real time, even when connectivity is limited. As industries pursue greater autonomy and real-time responsiveness, edge AI accelerators are becoming a cornerstone technology.

Read more - https://market.us/report/edge-ai-accelerator-market/

The Edge AI Accelerator Market is gaining strong traction as demand grows across the automotive, healthcare, manufacturing, and consumer electronics sectors. Businesses are increasingly investing in these solutions to reduce latency and their dependence on cloud infrastructure. The rapid growth of smart city projects, autonomous systems, and industrial automation is pushing the market toward widespread adoption. Rising computational demands, the need for real-time insights, and the requirement for secure data handling at the device level are driving both supply and demand upward, creating a highly competitive, innovation-driven ecosystem.

A key driver is the global shift toward decentralized computing, in which enterprises reduce their reliance on cloud servers by embedding intelligence directly into devices. Demand is also fueled by the growing use of AI-powered applications that require immediate decisions, such as predictive maintenance and autonomous mobility. Accelerators optimized for low power consumption and high performance help businesses close the performance gap in edge environments, where traditional CPUs fall short.

Key technologies propelling adoption include neural processing units (NPUs), tensor accelerators, and custom silicon architectures designed for AI inference at the edge. Tools built for on-device computing, such as the TensorFlow Lite framework and the ONNX model format with runtimes like ONNX Runtime, are also changing how developers and enterprises deploy AI locally. These innovations make it feasible to run intelligent applications even in highly resource-constrained environments, shortening time to market and reducing overhead costs.